Biological synapses effortlessly balance memory retention and flexibility, yet artificial neural networks still struggle with the extremes of catastrophic forgetting and catastrophic remembering. Here, we introduce Metaplasticity from Synaptic Uncertainty (MESU), a Bayesian update rule that scales each parameter’s learning by its uncertainty, enabling a principled combination of learning and forgetting without explicit task boundaries. MESU also provides epistemic uncertainty estimates for robust out-of-distribution detection; the main computational cost is weight sampling to compute predictive statistics. Across image-classification benchmarks, MESU mitigates forgetting while maintaining plasticity. On 200 sequential Permuted-MNIST tasks, it surpasses established synaptic-consolidation methods in final accuracy, ability to learn late tasks, and out-of-distribution data detection. In task-incremental CIFAR-100, MESU consistently outperforms conventional training techniques due to its boundary-free streaming formulation. Theoretically, MESU connects metaplasticity, Bayesian inference, and Hessian-based regularization. Together, these results provide a biologically inspired route to robust, perpetual learning.

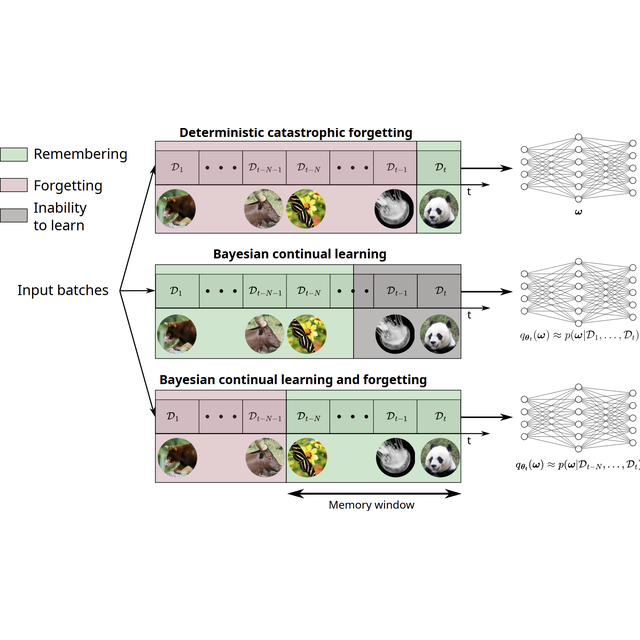

The human brain continuously learns from experience and retains past knowledge, a balance that artificial intelligence (AI) systems still struggle to achieve. When AI models learn new information, they often overwrite what they previously knew (a problem known as catastrophic forgetting) or, conversely, become too rigid to adapt to new data (catastrophic remembering).

To address this challenge, we drew inspiration from neuroscience, where recent theories suggest that biological synapses may follow Bayesian principles: they update the network's beliefs about the world by weighing new evidence against prior knowledge, and they track how uncertain each belief is. Building on this idea, we introduce a new AI framework for lifelong learning called Metaplasticity from Synaptic Uncertainty (MESU). In MESU, each connection in the network behaves like a “Bayesian synapse”: it maintains its own uncertainty estimate, adjusts how quickly it learns based on that confidence, and integrates a controlled forgetting mechanism for low-relevance data. MESU thus mirrors hypotheses on how the brain might manage memory stability and flexibility.
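
To make the idea of a “Bayesian synapse” concrete, here is a minimal Python sketch of an uncertainty-scaled update for a single weight. It is a simplified illustration under stated assumptions, not the update rule as published: the class `BayesianSynapse`, the function `update`, and the parameters `mu_prior`, `sigma_prior`, and `memory_window` are hypothetical names, and the weight is assumed to follow a Gaussian belief with mean `mu` and standard deviation `sigma`.

```python
from dataclasses import dataclass


@dataclass
class BayesianSynapse:
    mu: float = 0.0     # mean of the Gaussian belief over the weight
    sigma: float = 0.1  # standard deviation, i.e. the synapse's uncertainty


def update(s, grad_mu, grad_sigma,
           mu_prior=0.0, sigma_prior=0.1, memory_window=1000.0):
    """One illustrative update step (hypothetical form, for intuition only).

    Learning term: the step size is proportional to the variance, so a
    confident synapse (small sigma) changes little, an uncertain one a lot.
    Forgetting term: the belief relaxes slowly toward the prior, on a time
    scale set by memory_window, so uncertainty never collapses to zero.
    """
    var = s.sigma ** 2
    relax = 1.0 / (memory_window * sigma_prior ** 2)

    # Uncertainty-scaled learning.
    d_mu = -var * grad_mu
    d_sigma = -0.5 * var * grad_sigma

    # Controlled forgetting: pull the mean and the spread toward the prior.
    d_mu += var * relax * (mu_prior - s.mu)
    d_sigma += 0.5 * s.sigma * relax * (sigma_prior ** 2 - var)

    # Keep sigma strictly positive.
    return BayesianSynapse(s.mu + d_mu, max(s.sigma + d_sigma, 1e-8))


# The same gradient moves an uncertain synapse much more than a confident one.
confident = update(BayesianSynapse(mu=0.0, sigma=0.01), grad_mu=1.0, grad_sigma=0.0)
uncertain = update(BayesianSynapse(mu=0.0, sigma=0.1), grad_mu=1.0, grad_sigma=0.0)
```

In this sketch the variance plays the role of a per-synapse learning rate, which is the metaplasticity effect described above, while the relaxation toward the prior stands in for the controlled forgetting mechanism.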

Our experiments show that MESU achieves a strong balance between remembering and adapting. Across multiple benchmarks, including animal image classification, permuted digit classification, and incremental object classification scenarios, MESU consistently reduces both catastrophic forgetting and catastrophic remembering, while maintaining reliable measures of uncertainty. It outperforms widely used continual learning methods that rely on memory consolidation or task boundaries.
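
The uncertainty measures mentioned above rely on sampling weights to compute predictive statistics. The Python sketch below shows one standard way to do this for a toy binary classifier: draw weights from per-weight Gaussians, average the predictions, and split the total predictive entropy into an aleatoric part and an epistemic part (their difference, the mutual information). The function `predictive_uncertainty`, the one-layer logistic model, and this particular decomposition are assumptions chosen for illustration, not necessarily the measure used in the paper.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def binary_entropy(p, eps=1e-12):
    # Entropy (in nats) of a Bernoulli variable, clipped for stability.
    p = min(max(p, eps), 1.0 - eps)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def predictive_uncertainty(x, mu, sigma, n_samples=200, seed=0):
    """Monte Carlo estimate for a toy logistic model with Gaussian weights.

    Returns (mean probability, total, aleatoric, epistemic uncertainty).
    """
    rng = random.Random(seed)
    probs = []
    for _ in range(n_samples):
        # Sample one set of weights from the per-weight Gaussian beliefs.
        w = [rng.gauss(m, s) for m, s in zip(mu, sigma)]
        logit = sum(wi * xi for wi, xi in zip(w, x))
        probs.append(sigmoid(logit))

    mean_p = sum(probs) / n_samples
    total = binary_entropy(mean_p)                                # entropy of the mean prediction
    aleatoric = sum(binary_entropy(p) for p in probs) / n_samples  # mean entropy per sample
    epistemic = total - aleatoric                                 # disagreement between samples
    return mean_p, total, aleatoric, epistemic


x = [1.0, -2.0]
mu = [0.5, 0.5]
_, _, _, epistemic_confident = predictive_uncertainty(x, mu, sigma=[0.01, 0.01])
_, _, _, epistemic_uncertain = predictive_uncertainty(x, mu, sigma=[2.0, 2.0])
```

When the sampled networks disagree, as they tend to on inputs far from the training data, the epistemic term grows, which is what makes it usable as an out-of-distribution score. The cost is one forward pass per weight sample.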

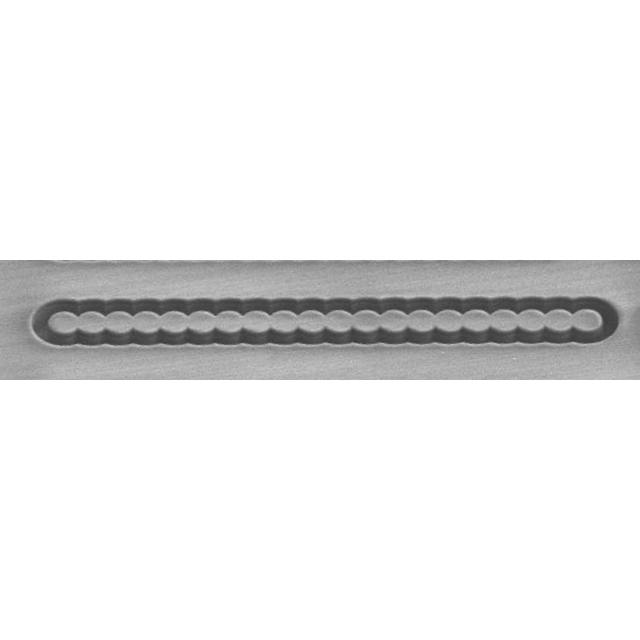

Beyond these results, MESU offers a principled bridge between neuroscience and machine learning, formalizing a biologically inspired way for AI systems to manage continual learning. Our next step is to extend MESU toward hardware-friendly probabilistic models, aiming to make brain-inspired continual learning practical for real-world, low-power AI devices.

References

Bonnet, D., Cottart, K., Hirtzlin, T., Januel, T., Dalgaty, T., Vianello, E. and Querlioz, D., 2025. Bayesian continual learning and forgetting in neural networks. Nature Communications, 16(1), p.9614. https://doi.org/10.1038/s41467-025-64601-w

Affiliations

Centre de Nanosciences et de Nanotechnologies (C2N), CEA-LIST and CEA-LETI

Keywords: Machine learning, Artificial Neural Networks, Bayesian Learning, Continual Learning, Metaplasticity, Uncertainty

Figure: Bayesian continual learning and forgetting. In continual learning, several datasets are presented to the network one after another. In our approach, the weights of a neural network follow a probability distribution that approximates a formulation gracefully balancing learning and forgetting, in contrast to previous approaches.